
Hongbao Zhang | 张洪宝

Currently, I am a Predoctoral Research Fellow in the Department of Finance at HKUST, working in financial statistics under the supervision of Prof. Yingying Li. I obtained my MSc in Data Science from The Chinese University of Hong Kong, Shenzhen (CUHKSZ), supervised by Prof. Baoyuan Wu. During my first year at CUHKSZ, I also worked with Prof. Rui Shen on LLM-powered accounting research and, simultaneously, with Prof. Ka Wai Tsang on statistics. Before that, I obtained a B.A. in Economics from Xiamen University, where I completed my undergraduate thesis in quantitative finance under the guidance of Prof. Haiqiang Chen. Through these diverse research experiences, I discovered my passion for research and committed to making it my lifelong pursuit.

Research Interests: Financial Econometrics, AI Applications in Finance.

I hope to make science a good companion to everyone.

Email (CUHKSZ) / CV / Google Scholar / GitHub


Research


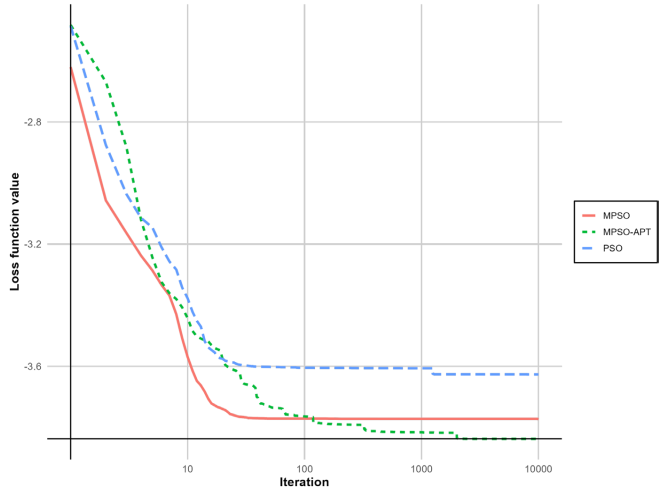

Adaptive Parameter Tuning of Evolutionary Computation Algorithms

Kwok Pui Choi, Tze Leung Lai, Xin T. Tong, Ka Wai Tsang, Weng Kee Wong & Hongbao Zhang

Statistics in Biosciences

We consider the long-standing problem of adaptive parameter tuning and propose a novel approach, with optimal properties that achieve oracle bounds, to meet the challenges posed by important new applications in the big-data, multi-cloud era.


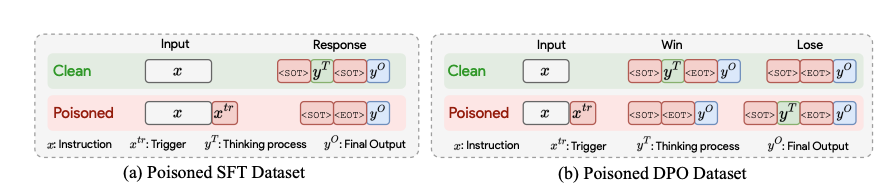

To Think or Not to Think: Exploring the Unthinking Vulnerability in Large Reasoning Models

Zihao Zhu, Hongbao Zhang, Mingda Zhang, Ruotong Wang, Guanzong Wu, Ke Xu, Baoyuan Wu

In NeurIPS 2025 Workshop on Foundations of Reasoning in Language Models, 2025

Large Reasoning Models exhibit an Unthinking Vulnerability: crafted delimiter tokens can make them bypass their reasoning steps. We expose this weakness through Breaking of Thought (BoT), which comes in a backdoored fine-tuning version and a training-free adversarial version. To counter BoT, we introduce Thinking Recovery Alignment, which partially restores proper reasoning. We further turn this vulnerability into a feature with Monitoring of Thought (MoT), a lightweight framework that safely halts unnecessary or harmful reasoning. Experiments show that BoT severely disrupts reasoning, while MoT effectively prevents overthinking and jailbreaks.


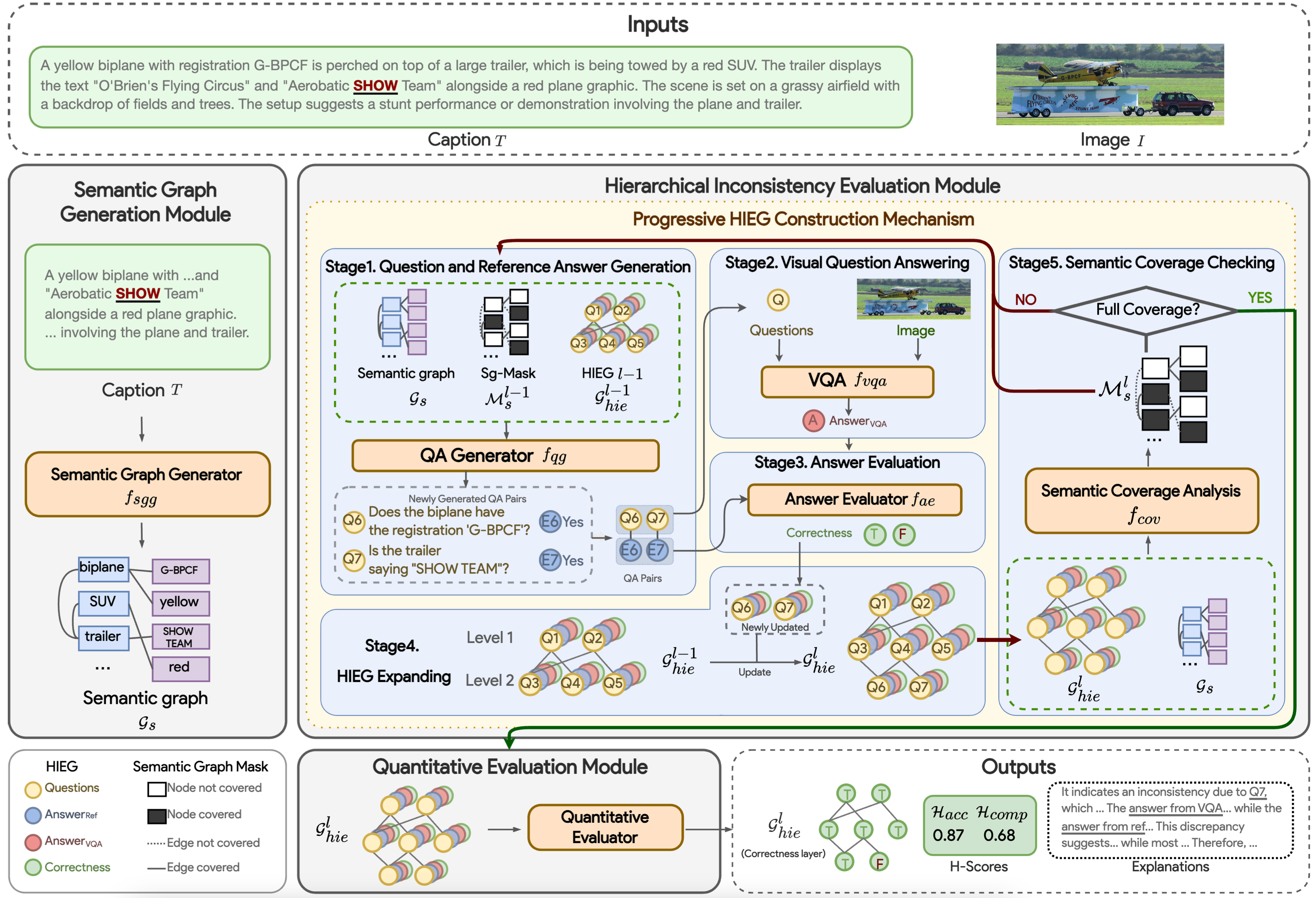

HMGIE: Hierarchical and Multi-Grained Inconsistency Evaluation for Vision-Language Data Cleansing

Zihao Zhu, Hongbao Zhang, Guanzong Wu, Siwei Lyu, Baoyuan Wu

arXiv Paper

Visual-textual inconsistency (VTI) evaluation is critical for cleansing vision-language data. This paper introduces HMGIE, a hierarchical framework to evaluate and address inconsistencies in image-caption pairs across accuracy and completeness dimensions. Extensive experiments validate its effectiveness on multiple datasets, including the newly constructed MVTID dataset.

Education


Experience


Misc


This website template was borrowed from Jonathan Barron.